Artificial Intelligence: How far will the new cycle go?

In April 2024, we published an article entitled "Tech: new bubble or new cycle?" in which we took the clear position that "a new technological cycle was beginning to take shape, with advances in semiconductors and the maturation of certain technologies related to artificial intelligence (AI)." Since then, the shares of American technology companies have risen by more than 50% [1], in line with the growth of their profits. Household adoption of AI assistants is progressing faster than that of previous major innovations (electricity, telephone, etc.), and in response the "hyperscalers" have significantly increased their investments and plan to raise them further. In this text, we offer some answers to questions about the sustainability of this new cycle (financing, physical limits, etc.).

Published on 11 December 2025

The race for AI scale is propelling digital giants into an era of colossal spending

For the past three years, Artificial Intelligence (AI) has been shaking up the markets with an intensity reminiscent of major turning points in the history of digital technology. However, this cycle is distinguished by its speed and, above all, by the financial weight required to support it. Hyperscalers [2] are revising their investments upward quarter after quarter, AI startups are signing industrial agreements worth several hundred billion dollars [3], and computing consumption is increasing at a pace that is putting electricity infrastructure worldwide under strain [4].

At the same time, adoption is becoming widespread: companies are reorganizing their budgets to integrate AI, "consumer" uses are exploding (around 800 million weekly users for ChatGPT [5]), and the first revenue models are beginning to emerge, without yet dispelling doubts about long-term profitability.

This acceleration is also reviving competition: Google is redefining its strategy around Gemini, China is consolidating its domestic players, Anthropic is specializing (Claude chatbot), and new startups, supported by NVIDIA, are appearing on the scene. Behind this movement, however, we believe a less visible fragility is emerging: a financial architecture that has become circular, massive contracts backed by a very small number of clients, and a reputational concentration that poses a systemic risk to the entire sector.

Infrastructure, a matter of industrial and national sovereignty

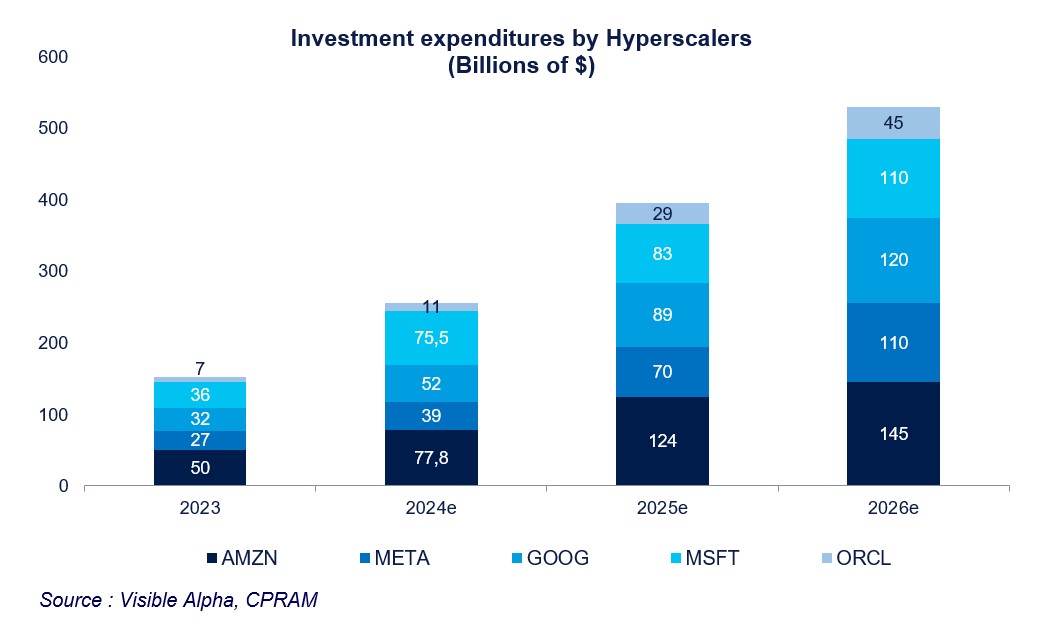

The current dynamic of the technology sector resembles a competition of brute force, a true race for scale, and the commentary accompanying third-quarter 2025 results only confirmed this trajectory, with no sign of slowing down. Microsoft, Google, Amazon, and Meta have all warned that the coming years will require unprecedented deployments of hardware, energy capacity, and data centers. In the quarterly earnings reports, the message was remarkably clear: spending will accelerate significantly in 2026 for all players. Alphabet mentions a "significant" increase in its investments, and Meta anticipates stronger growth in its investments in 2026 than in 2025 [6].

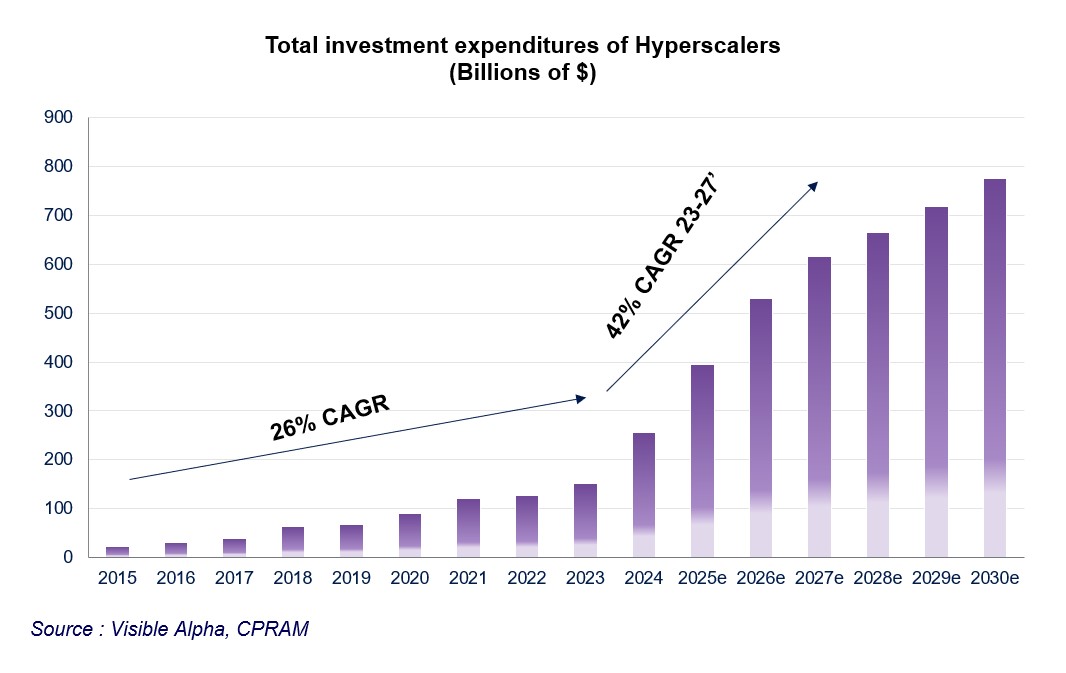

This intensification is not merely declarative: hyperscalers have raised their capital expenditure ("CapEx") expectations in response to a persistent imbalance between supply and demand in cloud and AI computing. The expected total is now approximately $395 billion for 2025 (+55% year-on-year), $530 billion for 2026 (+34%), and $615 billion for 2027 (+16%); see the graph below. Google expects supply to remain tight relative to demand until 2026 and continues to invest "aggressively" to meet the needs of its cloud customers [7]. Sundar Pichai (CEO of Alphabet) has reportedly asked his teams to double computing capacity every six months. As for Amazon, it claims to have added more than 3.8 gigawatts (GW) over the past twelve months ("more than any other cloud provider"), doubling its capacity compared to 2022 and aiming for another doubling by 2027, which would imply adding approximately 18 GW more [6].
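The growth rates attached to these CapEx totals can be cross-checked with a few lines of arithmetic. The sketch below uses only the figures quoted in the paragraph above; the implied 2024 base is derived from the +55% figure rather than taken from any source, and the variable and function names are purely illustrative.

```python
# Hyperscaler CapEx estimates quoted in the text, in billions of US dollars.
capex_bn = {2025: 395, 2026: 530, 2027: 615}

# Year-on-year growth quoted for 2025; used only to back out an implied 2024 base.
quoted_growth_2025 = 0.55


def yoy_growth(previous: float, current: float) -> float:
    """Return year-on-year growth as a fraction (0.10 means +10%)."""
    return current / previous - 1.0


# Implied 2024 base (a derived figure, not a sourced one).
implied_2024_bn = capex_bn[2025] / (1.0 + quoted_growth_2025)

growth_2026 = yoy_growth(capex_bn[2025], capex_bn[2026])
growth_2027 = yoy_growth(capex_bn[2026], capex_bn[2027])

# Cumulative spending over the three years covered by the estimates.
cumulative_bn = sum(capex_bn.values())

print(f"Implied 2024 base: about {implied_2024_bn:.0f} billion dollars")
print(f"2026 growth: {growth_2026:+.0%}")
print(f"2027 growth: {growth_2027:+.0%}")
print(f"Cumulative 2025-2027: {cumulative_bn} billion dollars")
```

The recomputed rates round to the +34% and +16% quoted in the text, and the three years together add up to roughly 1.5 trillion dollars.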

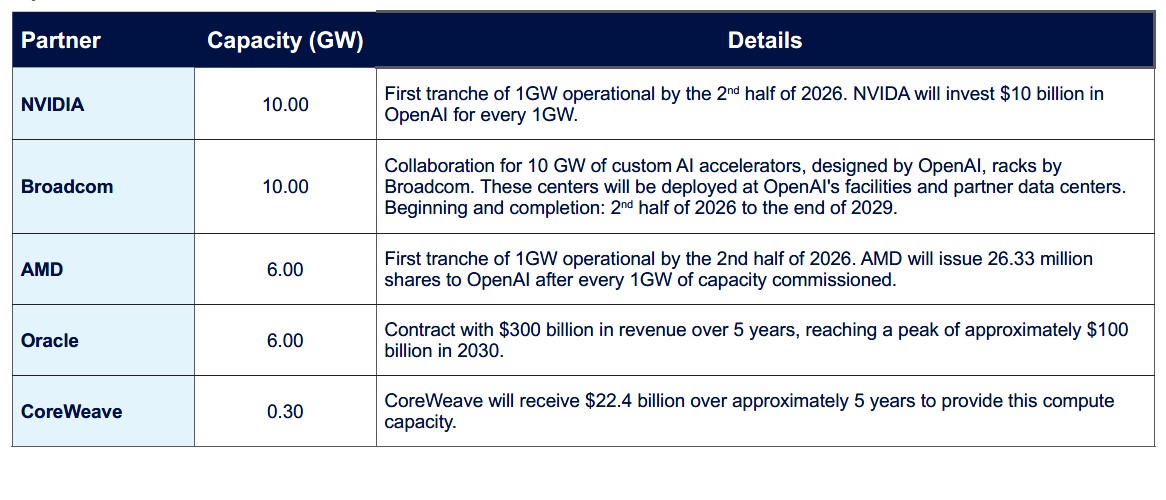

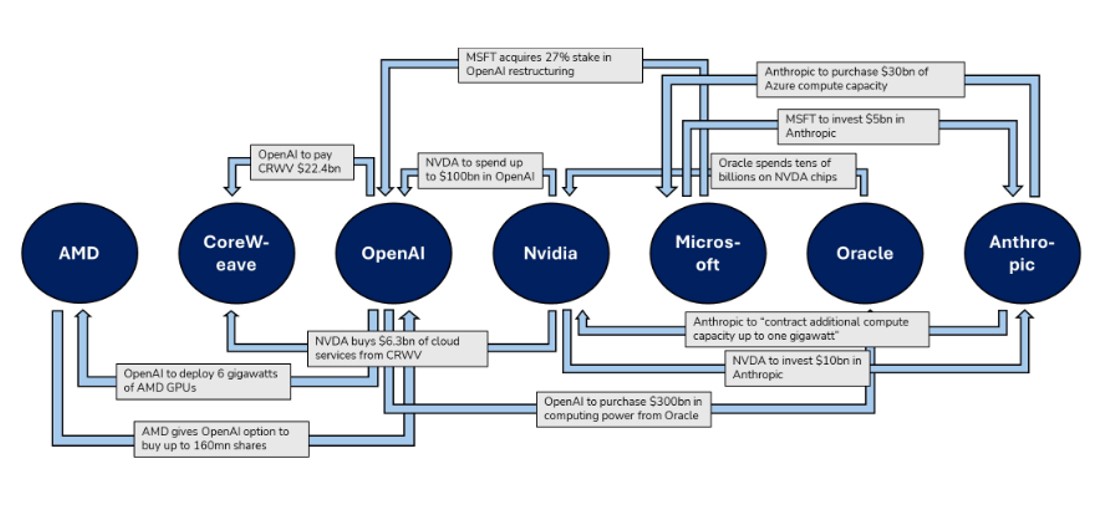

In this already tense landscape, OpenAI's acceleration in recent months has added a new, almost dizzying dimension to the global race for computing power. Since September, a series of contracts worth tens, even hundreds of billions of dollars has reshaped the industrial geography of AI. At the center of this web, two players dominate: OpenAI, whose ChatGPT remains the reference platform for language models, and NVIDIA, the chip designer. OpenAI's trajectory is simple to state but heavy with consequences: the demand for computing power is exploding, driven both by the training of increasingly ambitious models and by usage growth that exceeds the most aggressive forecasts. Contrary to the idea, widespread after the DeepSeek episode, that money was no longer a limiting factor, reality has reasserted itself: size is everything, and size is expensive. Ongoing projects involve several tens of gigawatts of new capacity, and the related investments are now measured in trillions of dollars. OpenAI has already committed to an additional 32 gigawatts, on top of the roughly 2 gigawatts provided by Microsoft Azure, Google Cloud, or Oracle. Applying an average cost of about 50 billion dollars per gigawatt to build, integrate, and network these infrastructures, the theoretical bill would reach 1.6 trillion dollars, an amount that, taken alone, rivals the market capitalization of the largest global technology groups.
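The theoretical bill mentioned above is a simple product of capacity and unit cost. As a rough sketch, the calculation below reproduces it and adds a sensitivity at 60 billion dollars per gigawatt, the upper end of the 50–60 billion range quoted in the financing section of this text. The helper name is hypothetical, and applying a single flat cost to every gigawatt is a simplifying assumption.

```python
# Figures quoted in the text.
COMMITTED_GW = 32                 # additional capacity OpenAI has committed to
COST_PER_GW_BN = (50, 60)         # cost range per gigawatt, in billions of dollars


def theoretical_bill_tn(capacity_gw: float, cost_per_gw_bn: float) -> float:
    """Return the build-out cost in trillions of dollars (flat cost per GW assumed)."""
    return capacity_gw * cost_per_gw_bn / 1_000


low_tn = theoretical_bill_tn(COMMITTED_GW, COST_PER_GW_BN[0])
high_tn = theoretical_bill_tn(COMMITTED_GW, COST_PER_GW_BN[1])

print(f"Theoretical bill: {low_tn:.2f} to {high_tn:.2f} trillion dollars")
```

At 50 billion dollars per gigawatt the result matches the 1.6 trillion dollars cited; at 60 billion it would be closer to 1.9 trillion.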

OpenAI: Contracts announced in Q3

The scale and pace of these contractual commitments, extremely rare on an industrial scale, nevertheless raise a question: is this hyper-aggressive strategy based on sufficiently solid fundamentals? In any case, it seems to us that it increases the vulnerability of an ecosystem already under strong financial and energy pressures. The increasing circularity of the flows linking OpenAI to its suppliers creates a risk of extreme concentration around the company. For example:

- On the infrastructure side: a $300 billion framework agreement with Oracle over five years and $1.6 trillion in commitments.

- On the financing side: up to $100 billion in capital from NVIDIA conditioned on orders per gigawatt, and a potential option for AMD to issue up to 10% of its capital in exchange for 6 GW of GPU capacity.

It seems to us that these arrangements create a network of dependencies where the supplier becomes an investor, and the investor becomes a client, blurring the line between real demand and demand stimulated by financial engineering.

Financing: The use of debt and off-balance-sheet items

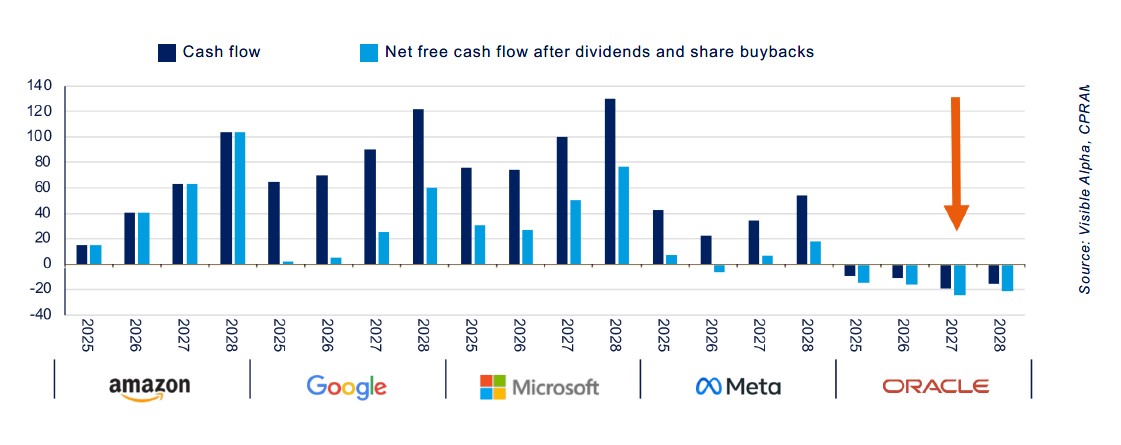

The rise of AI is also becoming a financing equation. Debt issuances by hyperscalers and the parallel growth of private and off-balance-sheet financing have recently flooded the markets, causing ripples throughout the entire capital chain. Although the cash generation capacity of large tech platforms remains high on the surface, the reality is more nuanced once dividends and share buybacks are deducted: the cash available to support massive infrastructure spending is lower than it appears. This has two visible effects: on the one hand, very large bond issuances (the latest operations, in the tens of billions, by Oracle, Google, and Meta illustrate this trend), and on the other hand, a proliferation of off-balance-sheet structures (Special Purpose Vehicles, or SPVs, and private financing) that complicate the mapping of commitments and concentrate risks that are not apparent at first glance.

This restructured financial architecture is not without areas of fragility. Oracle appears to us to be the most exposed case: in several sensitivity scenarios, it is the company most likely to face tangible financing needs in 2026–2027. It is also weakened by the extreme concentration of its order book around OpenAI, which makes Oracle dependent on OpenAI's commercial success. Meta, for its part, seems to us to show signs of pressure, even though its recent liquidity position, after a significant bond issuance, gives it some room for maneuver. Google remains better positioned in terms of residual liquidity in most simulations, while Amazon and Microsoft overall maintain a more robust free cash flow profile. Alphabet, Amazon, and Meta are rated AA+, AA, and AA- respectively by S&P: their issuances have been heavily oversubscribed, as securities of this quality are highly sought after by bond investors. Demand in the Investment Grade (IG) [8] segments is currently very strong, and these securities provide exposure to themes that bond investors could not previously access.

To meet these needs, financing structures are diversifying: beyond public IG issuances and private rounds, the role of vendor financing, leasing contracts for data center shells [9], and off-balance-sheet vehicles has significantly increased. These arrangements reduce the initial cash outlay but concentrate counterparty risk and shift exposure to sometimes opaque contractual horizons. The cost of an AI data center, which can reach 50–60 billion dollars per gigawatt and up to three times the price of a non-AI center, largely explains why players seek to distribute, spread out, or outsource part of the financial burden.

Monetization: Increase in tokens, what about profits?

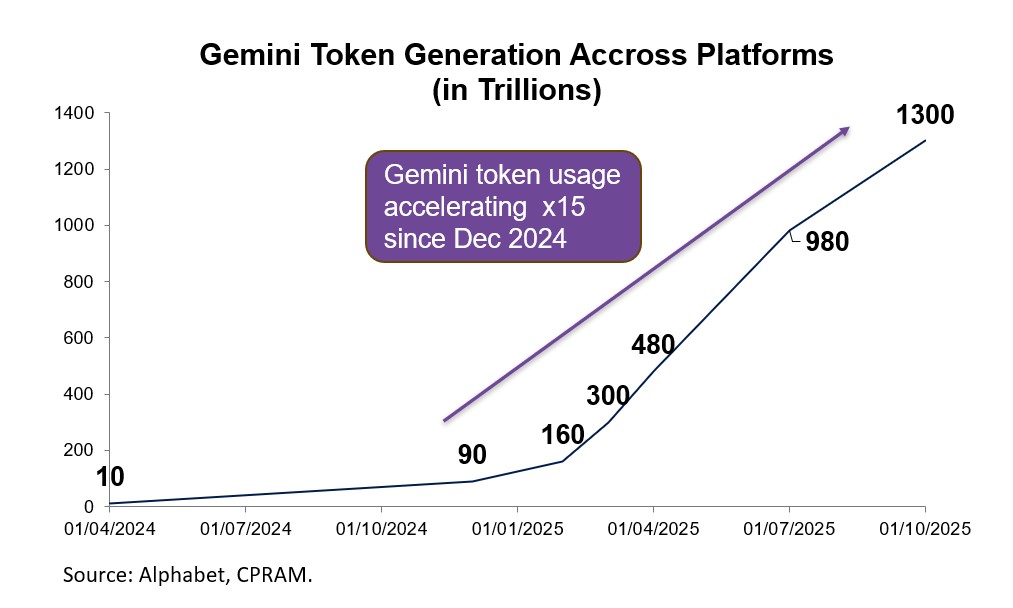

One of the most tangible indicators of the rapid adoption of AI remains token [10] consumption, whose growth has taken on an industrial scale. OpenAI now processes more than 6 billion tokens per minute, a volume twenty times higher than that observed just two years ago. Google, for its part, estimates that it processes about 1.3 quadrillion tokens per month across all its services. This dizzying inflation is not just a technical detail: it reflects a massive shift in usage [11].
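Because the two token figures above are quoted in different units (per minute and per month), a quick conversion helps put them on the same footing. The sketch below assumes a 30-day month and treats the quoted values as exact. It only aligns units: the two companies may not be measuring the same scope of products, so the result should not be read as a like-for-like ranking.

```python
# Assumption: a 30-day month for unit conversion.
MINUTES_PER_MONTH = 60 * 24 * 30

# Figures quoted in the text.
openai_tokens_per_minute = 6_000_000_000            # "more than 6 billion"
google_tokens_per_month = 1_300_000_000_000_000     # "about 1.3 quadrillion"
openai_growth_multiple_two_years = 20               # "twenty times higher"

# Express both figures in both units.
openai_tokens_per_month = openai_tokens_per_minute * MINUTES_PER_MONTH
google_tokens_per_minute = google_tokens_per_month / MINUTES_PER_MONTH

# A 20x increase over two years, if spread evenly, is sqrt(20) per year.
implied_annual_multiple = openai_growth_multiple_two_years ** 0.5

print(f"OpenAI: {openai_tokens_per_month / 1e15:.2f} quadrillion tokens per month")
print(f"Google: {google_tokens_per_minute / 1e9:.1f} billion tokens per minute")
print(f"Implied OpenAI growth: about {implied_annual_multiple:.1f}x per year")
```

Under these assumptions, 6 billion tokens per minute corresponds to about 0.26 quadrillion per month, and a twentyfold rise over two years to a multiple of roughly 4.5 per year.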

This dynamic is also reflected in subscriptions. OpenAI currently claims about 800 million weekly users and 35 million paying subscribers to the Plus or Pro plans, a figure that already places it among the largest subscription platforms in the world [12]. The company's internal projections suggest a potential of 220 million paying subscribers within five years, if the conversion rate continues to grow.
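The subscription figures above imply a conversion rate and a growth path that are easy to make explicit. In the sketch below, the conversion rate is measured against weekly users and "within five years" is taken as exactly five years; both are assumptions made for illustration, not definitions given in the text.

```python
# Figures quoted in the text, in millions.
weekly_users_m = 800
paying_subscribers_m = 35
projected_subscribers_m = 220

# Assumption: "within five years" is treated as a five-year horizon.
horizon_years = 5

# Share of weekly users who pay (assumed definition of the conversion rate).
paying_share = paying_subscribers_m / weekly_users_m

# Growth needed to reach the projection, and the equivalent compound annual rate.
growth_multiple = projected_subscribers_m / paying_subscribers_m
implied_cagr = growth_multiple ** (1 / horizon_years) - 1

print(f"Paying share of weekly users: {paying_share:.1%}")
print(f"Required growth multiple: {growth_multiple:.1f}x")
print(f"Implied compound annual growth: {implied_cagr:.0%}")
```

On these assumptions, a little over 4% of weekly users pay today, and reaching 220 million subscribers would require the paying base to grow by roughly 44% per year.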

OpenAI [13] now reports annualized revenue of about 20 billion dollars and aims for a significant increase in its revenues by 2030. Google is following a similar trajectory: the deep integration of Gemini into Android, Chrome, and Workspace gives it an almost automatic distribution effect, creating a user base that now exceeds 650 million monthly active users [14].

At the same time, a new monetization territory is beginning to emerge: AI agents. These systems capable of performing complete tasks — booking, purchasing, report generation, managing business tools — pave the way for more refined revenue models: billing per action, transaction commissions, or subscriptions for specialized agents. AI giants are already testing these models, particularly in online commerce, administrative management, and professional services. For OpenAI, Google, or Anthropic, these agents could constitute a second wave of monetization, potentially more significant than subscriptions, as they are directly indexed to the value generated for the user.

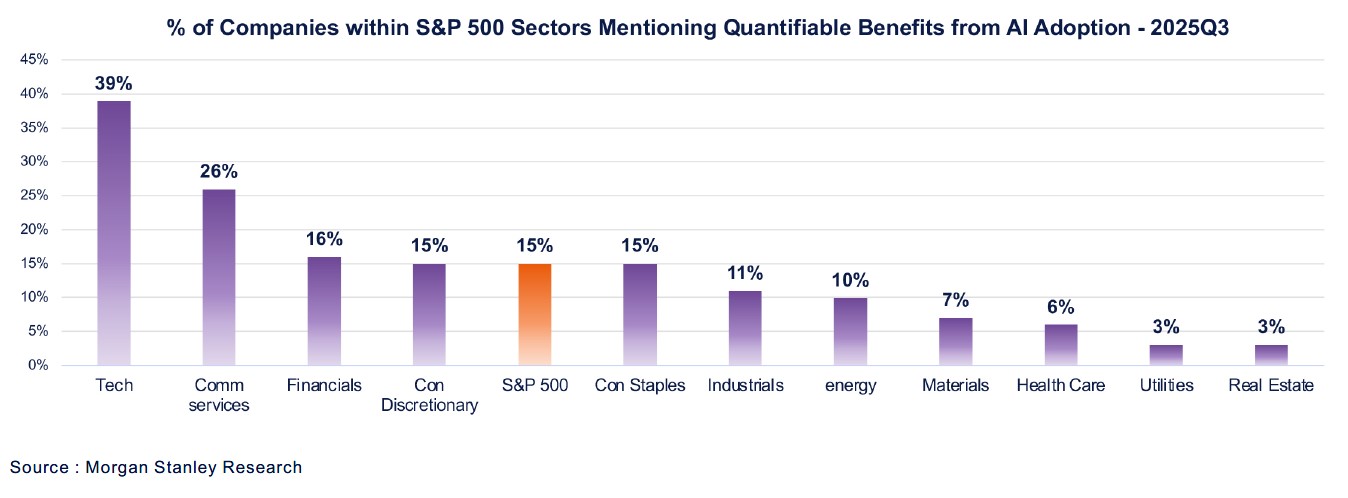

Enterprise adoption is also progressing rapidly. Recent surveys of CIOs (Chief Information Officers) show that initial hesitations have disappeared: nearly 63% of them now say they have a dedicated AI budget [15], and 15% of S&P 500 companies are already able to quantify the impact of AI.

We believe that, taken together, these elements form a landscape in which AI is no longer a technological add-on but a rapidly expanding business model: sharply increasing usage, massive subscriptions, new transactional revenues, and accelerated penetration into business processes. The monetization methods are multiple and still imperfect, but already unprecedented in scale for such a recent technology. Nevertheless, competition will be fierce among the various players, and not all will achieve the same level of success.

Energy, the real limit of AI?

What is often described as a "race for size" is largely a race for electricity. After two decades of almost stagnant electricity demand, with digitalization offset by efficiency gains, forecasts have suddenly changed. According to the U.S. Department of Energy, data centers accounted for 4.4% of U.S. electricity consumption in 2023; they could reach 7 to 12% as early as 2028, representing annual growth of between 13% and 27% [16]. On a global scale, the International Energy Agency [17] anticipates a doubling of data center demand by 2030, approaching 950 TWh, equivalent to Japan's total electricity consumption. Hyperscalers are the main drivers of this dynamic: Alphabet, Meta, and Microsoft have seen their consumption grow by more than 25% per year [18] since 2017, even though these figures mostly reflect the rise of the "traditional" cloud.

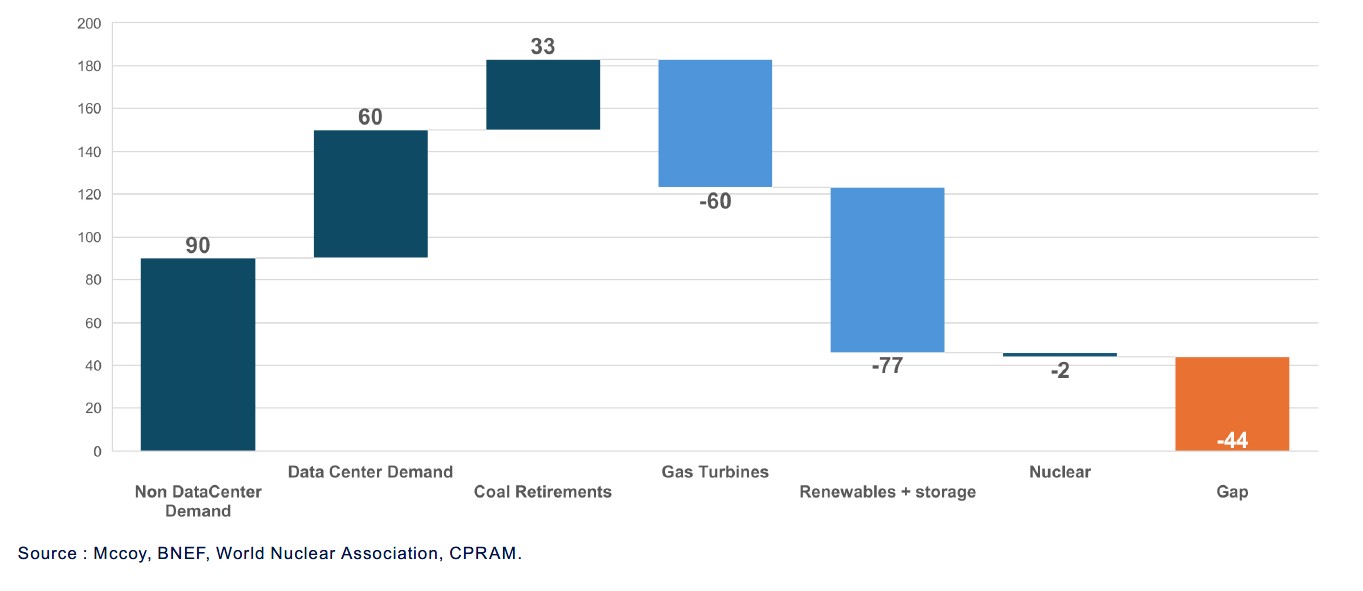

However, the issue does not lie solely in the availability of electricity production: it is the capacity to deliver electricity that is now saturated. In the United States, waiting times to connect to the grid exceed five years [19], compared to two to three years to build a data center. The absence of federal planning, environmental constraints, cost allocation conflicts, and the fragmentation of regulatory authorities have created a major bottleneck: it sometimes takes more than a decade to build a new high-voltage line. As a result, more than 160 GW [20] of connection requests have been submitted to U.S. utilities, a figure that includes double counting but clearly reflects the scale of the imbalance. Added to this are the physical limits of the current energy mix: heavy-duty gas turbines, the core of backup power in the United States, are now sold out until 2028 [21], after twenty years of underinvestment. Small modular nuclear reactors (SMRs), heavily publicized, will not be ready before the next decade. The generator market, still a Plan B, shows delivery times of 2 to 3 years. Combining these elements, our analysts estimate that a structural deficit of 40 GW could appear in the United States as early as 2028, a kind of "energy wall" to overcome.

Supply/Demand Imbalance (GW)

The importance of the AI cycle for the U.S. economy

The innovation and investment cycle related to AI described previously is very important for the American economy on several levels.

- The spectacular stock market performance of tech giants over the last decade means they are now the largest American companies by market capitalization: the "Magnificent Seven" represented about 36% of the S&P 500 at the end of November, equivalent to nearly 70% of American GDP [22]. The very strong stock market growth of technology companies in recent years (while that of other companies has been weak) has induced powerful "wealth" effects that have stimulated consumption. Conversely, we believe that a correction of these stocks would have very negative repercussions for growth.

- The increase in capital investment spending by hyperscalers since the summer of 2024 alone represents around 0.6% of American GDP [23]. The strong rise in data center construction spending in the United States over recent years has helped limit the contraction of non-residential construction.

- Private investments in software and computer equipment have been so strong recently that they accounted for 92% of American growth in the first half of 2025, even though this segment represents only 4% of GDP [24]. However, a good portion of these investments is made with imported equipment, so the "real" contribution is lower.

- A number of large companies have announced plans for job cuts linked to productivity gains enabled by AI. Several studies are beginning to show a link between exposure to AI and changes in unemployment at the sectoral level.

The question of AI's impact on the economy in general is becoming unavoidable in current debates. This can be seen, for example, in the communications of the Fed. During 2025, members of the Fed Board gave 9 speeches with the term "Artificial Intelligence" in their titles, more than in the previous 5 years combined. The minutes of the October 2025 meeting of the FOMC (Federal Open Market Committee) [25] contain the terms "AI" or "Artificial Intelligence" 8 times, which had never happened before. Mentions of AI are linked to various topics: stock market valuation, impact on growth via investments in data centers, productivity gains allowing companies to preserve margins, and consequences for the labor market. This illustrates how developments related to AI can take on a macroeconomic character.

Conclusion

In April 2024, we wrote a text affirming our conviction that the spectacular stock market performance of Tech was not explained by the formation of a bubble but rather by the birth of a new cycle, particularly with the maturation of certain technologies related to artificial intelligence. Since then, this cycle has continued its development, and hyperscalers have embarked on a race for scale with investments that can now be described as macroeconomic in size. Of the three risks identified today, namely financing, monetization, and physical limits, the order of concern in our view is probably: physical limits followed by monetization, then bond financing.

Although hyperscalers have good visibility on the demand they will face, questions about the feasibility of projects are likely to surface periodically over the coming quarters, leading to bouts of fairly high volatility. It will probably be necessary to approach this cycle with greater selectivity and room for maneuver in order to be able to reposition in case of a marked decline.

Nevertheless, we remain confident in the development of the technological cycle related to AI and, moreover, we will communicate more about its various aspects in 2026, particularly regarding the actual trajectory of AI development versus the current announcements from the different players.

1. Between April 2024 and the end of November 2025

2. Cloud service provider with a very large-scale infrastructure.

3. OpenAI press release

4. Source: IEA

5. Source: https://techcrunch.com/2025/10/06/samaltman-says-chatgpt-has-hit-800m-weekly-active-users/

6. Source: Earnings Calls, Q3 2025.

7. Source: Visible Alpha, CPRAM.

8. Investment Grade (IG): the quality category (investment-quality rating) assigned by rating agencies to bonds and bond issuers.

9. Prefabricated data center leasing contract. The exact definition: “Prefabricated data centers are facilities whose external structure is completed, with power supply and connectivity available, but whose interior is left unfinished and must be fitted out by the client.”

10. Text units processed by an AI model.

11. Source: Sam Altman (OpenAI CEO), Google Earnings call October 2025.

12. Source: The Information, Google, OpenAI

13. Source: https://www.youtube.com/watch?v=Gnl833wXRz0

14. Source: The Information, Google, OpenAI.

15. Source: KPMG 2025 CFO & CIO Collaboration Survey

16. 2024 United States Data Center Energy Usage Report, Dec 2024.

17. Source: World Energy Outlook 2025

18. Google, Microsoft and Meta 2025 Sustainability Reports

19. Source: DOE

20. Wood Mackenzie: https://www.woodmac.com/press-releases/us-utility-large-load-commitments-reach-160-gw-amid-unprecedentedpjm-demand-surge/

21. Siemens Energy, CPRAM.

22. CPRAM as of 11/30/2025

23. Source: Company financial documents and BEA for US GDP

24. Source: BEA.

25. FOMC: Federal Open Market Committee. Monetary policy committee of the United States Federal Reserve (FED): https://www.federalreserve.gov/monetarypolicy/fomcminutes20251029.htm